How sure are you that the training metrics you’re tracking actually provide valuable insights into improving your training program?

Closing the loop doesn’t end with implementing a training program. As an L&D leader, you need to uncover learning’s influence on behavior, performance, actions, and business goals.

By learning how to build a strong case for your learning initiatives, you’ll be able to see whether they actually work and demonstrate their impact on the top and bottom lines. Thankfully, technology has made it easy to capture large amounts of L&D data and information.

This data, when put to good use, provides significant insights to help understand both the benefits and gaps in your learning programs and, more importantly, help drive your learning strategy forward.

However, according to Brandon Hall Group, fewer than 25% of companies capture anything beyond very basic metrics, and only about 20% of companies are able to analyze a majority of their data in a meaningful way.

How come? It’s because they don’t have the proper metrics.

What learning metrics really matter?

In evaluating the effectiveness and impact of your training, it’s important to look at the right metrics: actionable ones, not vanity ones.

Basically, vanity metrics are numbers that are nice to have but don’t actually provide much insight. They explain the current state of your L&D efforts but aren’t so helpful when it comes to achieving better business outcomes. Examples include the number of active learners and the number of course completions.

Actionable metrics, on the other hand, are numbers that provide valuable insights into your learning strategy because they tie specific actions to real-life results.

You can observe this in how many employees perform better at work using what they learned, or even in how effectively they collaborate within teams.

Actionable metrics are the better choice because they measure both the link between L&D and organizational performance and the effectiveness of learning programs.

Now that you have an idea of what learning metrics really matter, it’s best to have a framework that can guide you through your evaluation.

Working forward with the Kirkpatrick Learning Model

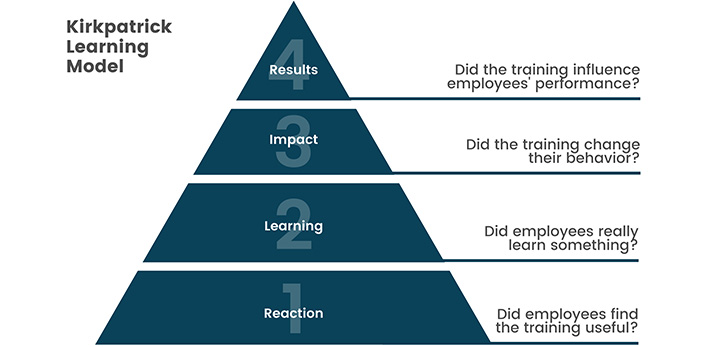

In the world of corporate training, a number of learning frameworks have been used to assess the results of L&D programs. The most commonly used is the Kirkpatrick Learning Model, known for its four-level approach.

These are the four levels:

Reaction: How did employees react to the training they received?

At this level, you’ll gain insight into how learners feel about the training and whether they found it useful. You can use surveys and questionnaires, or talk to learners before and after the course, to collect their feedback on the learning experience.

Learning: What did employees actually learn from training?

This level measures how much information was actually absorbed during the training. Common ways to measure this are by comparing pre-tests to post-tests and giving hands-on assignments that demonstrate the person learned a new skill.

Behavior: Did employees apply what they’ve learned to their jobs?

For the third level, you’ll discover and understand how the training has impacted the learner’s performance and attitude at work. To measure this, you can conduct self-assessment questionnaires, on-the-job observation, or even gather feedback from peers and managers.

Results: What did this changed behavior result in?

How have the knowledge and skills employees acquired affected the bigger goals behind the training? You can observe this through increased productivity and quality of work, and through improved business results such as closed deals, better marketing leads, and increased sales.
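The pre-test and post-test comparison mentioned under the Learning level comes down to simple arithmetic. Here is a minimal Python sketch of one way to do it. The function names, the 100-point scale, and the sample scores are all made up for illustration, and the "normalized gain" calculation is our own addition rather than part of the Kirkpatrick model itself.

```python
from statistics import mean


def average_gain(pre_scores, post_scores):
    """Mean per-learner improvement, in score points."""
    if len(pre_scores) != len(post_scores):
        raise ValueError("each learner needs both a pre-test and a post-test score")
    return mean(post - pre for pre, post in zip(pre_scores, post_scores))


def normalized_gain(pre, post, max_score=100):
    """Share of the available headroom a learner actually gained.

    Assumes scores are on a 0..max_score scale (an assumption of this sketch).
    """
    if pre >= max_score:
        return 0.0  # no room left to improve
    return (post - pre) / (max_score - pre)


# Made-up scores for three learners, for illustration only.
pre = [40, 55, 70]
post = [60, 75, 85]

print(f"Average gain: {average_gain(pre, post):.1f} points")
print(f"Normalized gain for learner 1: {normalized_gain(pre[0], post[0]):.2f}")
```

The raw average tells you how far the group moved. The normalized figure helps when learners start from very different baselines, since a 15-point jump means more for someone who started at 70 than for someone who started at 40.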

As a lot of companies have found, this is one of the most successful models that can help you measure the effectiveness of your company’s training program. Still, a lot of learning leaders seem to misuse it either by simply following it linearly or only applying one to two levels to their evaluation efforts.

The best way to use it? Having a clear idea of the result you want to achieve and then working backward on how to achieve it. Here’s what we mean.

Mapping Metrics to Actions

The easiest way to get started is to view the Kirkpatrick Learning Model as part of your design process. When an evaluation plan is in place from the very beginning of a training program, it is easier to monitor the metrics along the way and report them at the end.

To do this, you have to flip the framework, starting with Level 4 and working in reverse. Here’s a step-by-step process to help you better understand it:

Start with Level 4: Results.

Using the framework as a guide, determine what goals you want to achieve by the end of your learning program, and then plan what actions you’ll take to make them happen. Say you want to improve the way employees carry themselves in front of their peers and clients. Your key metric here might be the confidence of your employees.

Work backward, taking Level 3: Behavior.

Continuing the example, you believe employees will be more confident when they communicate efficiently and more effectively. This can be through how they speak with customers, how they collaborate within diverse teams, or even how they hold presentations.

Go to Level 2: Learning.

To help them learn those skills, you create a solution built around better workplace communication: language learning. At this stage, you can be more specific about how you want to implement the training, such as connecting language skills to business-related skills so that the learning stays relevant and applicable.

End with Level 1: Reaction.

Think about how employees might respond to the kind of training you want to carry out. Here, what matters most is that they understand the importance of language skills for work and are bought in on using them.
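If it helps to see the backward plan written down, the four steps above can be captured in a small data structure. This is only a sketch of one way to record the language-learning example. The `LevelPlan` class and its field names are hypothetical, and the measures listed are the ones suggested earlier for each level.

```python
from dataclasses import dataclass


@dataclass
class LevelPlan:
    level: int           # Kirkpatrick level number, 1 to 4
    name: str            # Reaction, Learning, Behavior, or Results
    target: str          # what you want to see at this level
    measures: list[str]  # how you plan to observe it


def build_plan():
    """Return the plan in design order: Results first, Reaction last."""
    plan = [
        LevelPlan(1, "Reaction", "employees see why language skills matter at work",
                  ["surveys", "questionnaires", "conversations with learners"]),
        LevelPlan(2, "Learning", "language skills tied to business-related skills",
                  ["pre-test vs. post-test", "hands-on assignments"]),
        LevelPlan(3, "Behavior", "clearer, more effective communication",
                  ["self-assessment", "on-the-job observation", "peer and manager feedback"]),
        LevelPlan(4, "Results", "more confident employees with peers and clients",
                  ["employee confidence"]),
    ]
    # Flip the framework: start from Level 4 and work backward.
    return sorted(plan, key=lambda step: step.level, reverse=True)


for step in build_plan():
    print(f"Level {step.level} ({step.name}): {step.target}")
    print(f"  measured by: {', '.join(step.measures)}")
```

Writing the plan down this way keeps each level's measure next to the outcome it is meant to support, which makes it harder for a vanity metric to slip in unnoticed.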

The Takeaway

Notice how, at each level, we favored actionable metrics over vanity ones. That’s because raw numbers and figures may be good for measuring training activities, but what you really want to look out for is whether all this training has produced results on the job.

That said, do you now feel better equipped to evaluate your training program? Let us know what steps you plan to take next in the comments!